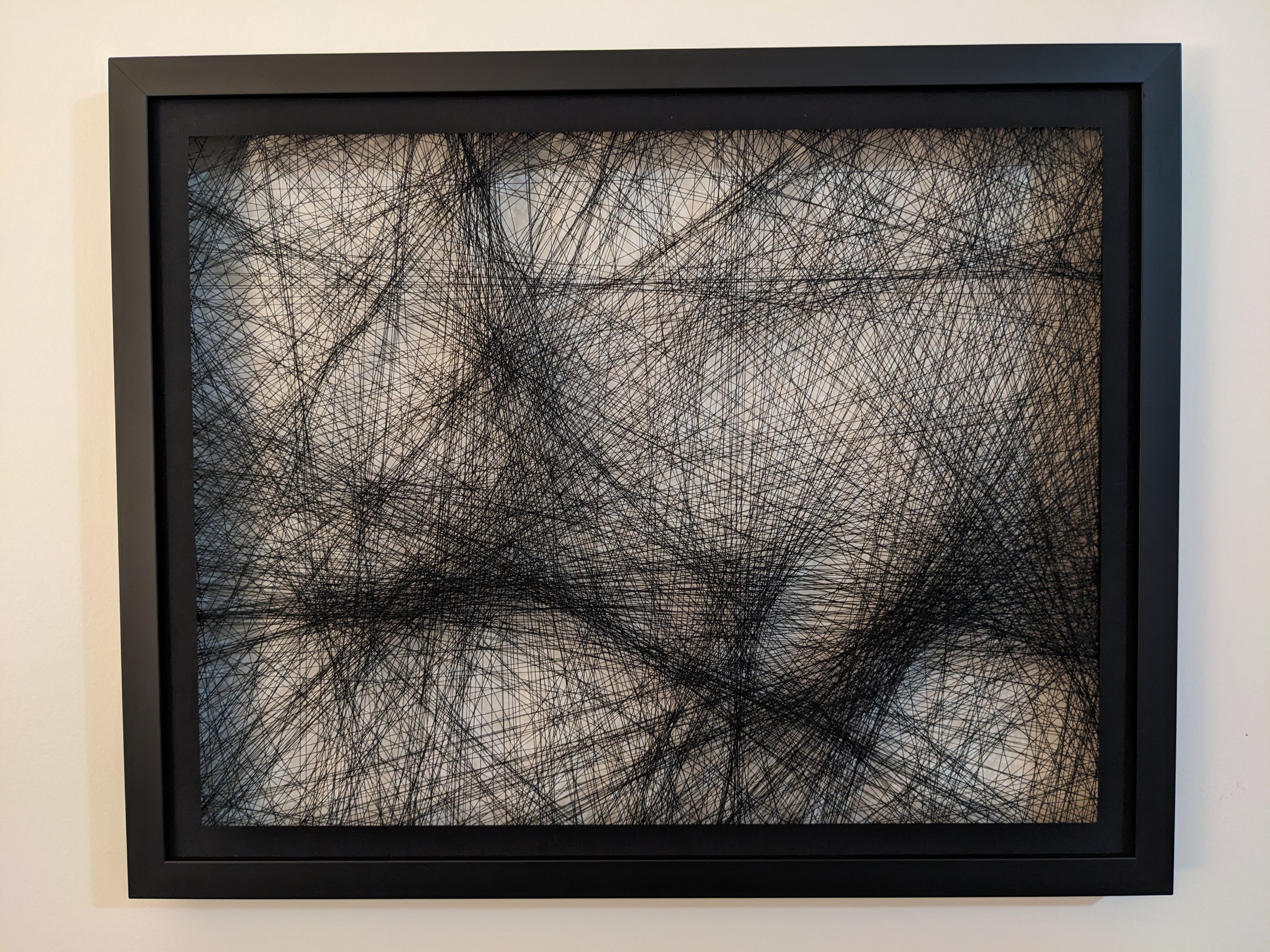

This post is a high-level overview of how I wrote code that turns an image into instructions for making string art portraits.

Many others have done this and taken it well beyond what I do! Here are a few representative samples:

- A new way to knit (2016) by Petros Vrellis, which also features images in color

- Polar Platform Spins Out Intricate String Art Portraits by Dan Maloney showing a simple robot to automate the threading process

- Greedy weaving_algorithm by MarginallyClever, which outlines a greedy algorithm:

  - Find the darkest line between any two of the 200 points around the edge of the circle.

  - Add that line to the output image.

  - Subtract that line from the input image.

  - Repeat 2000 times.

- An interactive Web based string art generator by halfmonty
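To make the greedy steps listed above concrete, here is a minimal pure-Python sketch. It is not MarginallyClever's code: the function names, the `strength` parameter, and the choice to score a line by its mean darkness are all assumptions made for illustration. Images are plain nested lists where 1.0 means black.

```python
import math

def circle_nails(count, size):
    """Place `count` nails evenly around a circle inside a size x size image."""
    center = (size - 1) / 2
    return [(center + center * math.cos(2 * math.pi * i / count),
             center + center * math.sin(2 * math.pi * i / count))
            for i in range(count)]

def line_pixels(p, q):
    """Set of integer pixels along the segment p-q (simple sampling, no anti-aliasing)."""
    (x0, y0), (x1, y1) = p, q
    steps = int(max(abs(x1 - x0), abs(y1 - y0))) + 1
    return {(round(x0 + (x1 - x0) * s / steps), round(y0 + (y1 - y0) * s / steps))
            for s in range(steps + 1)}

def greedy_lines(darkness, nails, line_count, strength=0.25):
    """darkness[y][x] is in [0, 1] with 1 = black. Returns the chosen (i, j) nail pairs."""
    remaining = [row[:] for row in darkness]
    # Precompute the pixels of every candidate line once.
    candidates = {(i, j): line_pixels(nails[i], nails[j])
                  for i in range(len(nails)) for j in range(i + 1, len(nails))}

    def mean_darkness(pair):
        pixels = candidates[pair]
        return sum(remaining[y][x] for x, y in pixels) / len(pixels)

    chosen = []
    for _ in range(line_count):
        best = max(candidates, key=mean_darkness)   # darkest remaining line
        chosen.append(best)                         # "add it to the output"
        for x, y in candidates[best]:               # "subtract it from the input"
            remaining[y][x] = max(0.0, remaining[y][x] - strength)
    return chosen
```

With 200 nails and 2000 lines this naive version would be very slow, since it rescores every candidate line on each pass. It is only meant to show the shape of the loop.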

Compared to other algorithms, mine is distinct in a few ways:

- not just a greedy algorithm

- you can pass an extra gray-scale "mask" image to indicate which parts of the image are more important (and how much more important)

- arbitrary nail/notch locations

A simple non-greedy algorithm

Here's how a simple algorithm might work, given a list of nail positions and a target image:

- Convert target image to gray scale and blur it

- Create a list of points that form lines. For example, if `a`, `b`, and `c` are points, then the list `[a, b, c]` represents the two lines `(a, b)`, `(b, c)`.

- Repeatedly:

  - Randomly try removing some of the points and replacing them with other points. For example, with `[a, b, c]`, try removing `b` and replacing it with other points like `y`, `r, d`, or nothing at all (no points).

  - Create a blurred image of what the lines look like

    - Create a blank temporary image

    - Draw all the lines

    - Blur the temporary image

  - Compare each pixel of the temporary image against the target image and add up how different each pixel is to calculate a "difference score"

  - If the "difference score" is smaller, use the newly generated points

That's it! This algorithm works surprisingly well and it guarantees that all of the lines are connected to each other, like a single long thread.

Compared to a greedy algorithm, this one is much slower but will work with any set of nails/notches since you know all of the lines will be connected.
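The loop described above can be sketched in pure Python as follows. This is an illustration under assumptions rather than my actual code: the 3x3 box blur, the rule of swapping zero to two points per try, the absolute-difference score, and every function name are made up for the example. Images are nested lists where 1.0 means black, and `points` holds indices into `nails`.

```python
import random

def line_pixels(p, q):
    """Set of integer pixels along the segment p-q."""
    (x0, y0), (x1, y1) = p, q
    steps = int(max(abs(x1 - x0), abs(y1 - y0))) + 1
    return {(round(x0 + (x1 - x0) * s / steps), round(y0 + (y1 - y0) * s / steps))
            for s in range(steps + 1)}

def box_blur(img):
    """3x3 box blur; edge pixels average over the neighbours that exist."""
    size = len(img)
    out = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(size, y + 2))
                    for i in range(max(0, x - 1), min(size, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out

def render(points, nails, size):
    """Blank image -> draw all the lines -> blur."""
    img = [[0.0] * size for _ in range(size)]
    for a, b in zip(points, points[1:]):
        for x, y in line_pixels(nails[a], nails[b]):
            img[y][x] = 1.0
    return box_blur(img)

def difference(a, b):
    """Sum of per-pixel absolute differences (the "difference score")."""
    return sum(abs(p - q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))

def mutate(points, nail_count, rng):
    """Remove 0-2 consecutive points and put 0-2 random points in their place."""
    start = rng.randrange(len(points) + 1)
    removed = rng.randint(0, 2)
    added = [rng.randrange(nail_count) for _ in range(rng.randint(0, 2))]
    return points[:start] + added + points[start + removed:]

def optimize(target, nails, iterations, seed=0):
    rng = random.Random(seed)
    size = len(target)
    blurred_target = box_blur(target)
    points = [0, 1]
    best = difference(render(points, nails, size), blurred_target)
    for _ in range(iterations):
        candidate = mutate(points, len(nails), rng)
        score = difference(render(candidate, nails, size), blurred_target)
        if score < best:  # keep the change only if it improves the score
            points, best = candidate, score
    return points, best
```

Because the result is always a single list of points, consecutive lines share an endpoint by construction, which is what keeps the thread continuous.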

A more flexible algorithm

In practice, the simple non-greedy algorithm tends to create lots of extra lines that don't actually contribute much to the image. By removing the constraint that each line segment must be connected to the next line, we can add a step that removes lines that contribute too little to the image. This allows us to generate an image with much less string, so it's easier to assemble.

Unfortunately, this creates a new problem: the lines now need to be connected. It's easy to address, though. After generating a list of lines, we can greedily try to connect the lines to each other and then connect the remaining lines by adding a few new ones.
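One way the connecting step could look is sketched below. It is a simplified assumption, not my exact approach: it walks the path greedily, always preferring an unused line that starts where the thread currently ends, and only adds a new connector line when none is available. The name `connect_segments` and the choice to jump to the first unused line are hypothetical.

```python
def connect_segments(segments):
    """Order (a, b) nail-index pairs into one continuous path of nail indices.

    Returns (path, extra), where `extra` counts the connector lines that had to
    be added because no unused segment touched the current end of the thread.
    """
    unused = list(segments)
    if not unused:
        return [], 0
    first = unused.pop(0)
    path, extra = [first[0], first[1]], 0
    while unused:
        end = path[-1]
        # Greedy step: any unused segment that touches the current end.
        match = next((s for s in unused if end in s), None)
        if match is None:
            # Nothing touches the end: add a new line over to some unused segment.
            match = unused[0]
            path.append(match[0])
            extra += 1
            end = match[0]
        unused.remove(match)
        path.append(match[1] if match[0] == end else match[0])
    return path, extra
```

For example, `connect_segments([(0, 1), (2, 3), (1, 2)])` needs no extra lines, while `connect_segments([(0, 1), (2, 3)])` has to add one connector from nail 1 to nail 2.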

Using a mask

No matter what algorithm you use, there are always trade-offs. Drawing a line will usually make your generated image more similar to your target image in some areas and more different in others. The mask tells the algorithm which areas matter more.

The only change to the algorithm is to multiply the brightness of the mask image by the difference between the generated and target images at each pixel. An equivalent way to use the mask is to multiply both the generated and target images by the mask brightness before calculating their difference. Parts of the generated/target images where the mask image is dark (close to zero) will not contribute much to the difference score.
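As a small sketch of the two mask variants, assuming the difference score is a sum of per-pixel absolute differences and that mask values are non-negative (both are assumptions, as are the function names):

```python
def weighted_difference(generated, target, mask):
    """Multiply the per-pixel difference by the mask brightness."""
    return sum(m * abs(g - t)
               for row_g, row_t, row_m in zip(generated, target, mask)
               for g, t, m in zip(row_g, row_t, row_m))

def premultiplied_difference(generated, target, mask):
    """Multiply both images by the mask first, then take the difference."""
    return sum(abs(m * g - m * t)
               for row_g, row_t, row_m in zip(generated, target, mask)
               for g, t, m in zip(row_g, row_t, row_m))
```

The two agree because `abs(m * g - m * t)` equals `m * abs(g - t)` whenever `m` is not negative. A mask pixel of 0 removes that pixel from the score entirely, and a pixel of 0.5 counts half as much as a pixel of 1.0.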

Optimizing

There are a lot of optimizations that make the non-greedy algorithm run faster! I only talk about a couple of them here:

Representing the generated image as a matrix

Drawing thousands or millions of lines can be pretty slow, at least on my CPU. A lot of the algorithm's time is spent redrawing the same lines over and over again, since only a few are removed and a few are added each iteration.

Instead of creating a new image and redrawing all of the lines, we can maintain a 2D array (matrix) where each cell represents the number of lines that pass through that pixel. Then removing a line is as easy as subtracting 1 from each corresponding cell in the matrix, and drawing a line is just adding 1 to each corresponding cell.

Comparing the generated image to the target image is still expensive since you need to convert that matrix to an image but it's much faster, at least on a CPU.
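A minimal sketch of the count matrix, using nested lists and a simple line sampler (the helper names are hypothetical, and a real implementation would more likely use an array library):

```python
def line_pixels(p, q):
    """Set of integer pixels along the segment p-q."""
    (x0, y0), (x1, y1) = p, q
    steps = int(max(abs(x1 - x0), abs(y1 - y0))) + 1
    return {(round(x0 + (x1 - x0) * s / steps), round(y0 + (y1 - y0) * s / steps))
            for s in range(steps + 1)}

def new_counts(size):
    """size x size matrix of zeros: no lines drawn yet."""
    return [[0] * size for _ in range(size)]

def add_line(counts, pixels):
    """Drawing a line: add 1 to every cell the line passes through."""
    for x, y in pixels:
        counts[y][x] += 1

def remove_line(counts, pixels):
    """Removing a line: subtract 1 from the same cells."""
    for x, y in pixels:
        counts[y][x] -= 1

counts = new_counts(9)
diagonal = line_pixels((0, 0), (8, 8))
anti_diagonal = line_pixels((8, 0), (0, 8))
add_line(counts, diagonal)
add_line(counts, anti_diagonal)
remove_line(counts, diagonal)  # only touches the diagonal's own pixels
```

Each update costs time proportional to the length of one line rather than the total length of all lines. Caching the pixel set for each pair of nails would also avoid recomputing it.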

Multiple Scales

Calculating the best string art on a large image can be slow but calculating it on an image with 1/2 the width and 1/2 the height is much faster, so that's what we do! As a bonus, we can recursively apply this approach to calculate string art for a smaller version of the image, then scale it up again and adjust the string art to have finer detail.
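As a sketch of how the smaller versions might be produced, the snippet below halves an image by averaging each 2x2 block and builds a list of scales from coarsest to finest. Averaging is an assumption (any reasonable downscaling would do), and `halve` and `pyramid` are hypothetical names.

```python
def halve(image):
    """Downscale by 2 in each direction by averaging 2x2 blocks."""
    height, width = len(image) // 2, len(image[0]) // 2
    return [[(image[2 * y][2 * x] + image[2 * y][2 * x + 1]
              + image[2 * y + 1][2 * x] + image[2 * y + 1][2 * x + 1]) / 4
             for x in range(width)]
            for y in range(height)]

def pyramid(image, levels):
    """Return `levels` versions of the image, coarsest first."""
    scales = [image]
    for _ in range(levels - 1):
        scales.append(halve(scales[-1]))
    return scales[::-1]
```

The idea would be to solve the coarsest image first, then reuse that list of lines as the starting point at the next scale, with the nail coordinates scaled to match.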

At the usual scale, e.g. 800 by 800 pixels, I assume that each string that overlaps with a pixel contributes about 0.25 (out of 1) to that pixel's darkness. In other words, the code allows for up to 4 strings to overlap a pixel and darken it but any more strings overlapping that pixel don't make the pixel any darker. For an image that's 1/4 the size (1/2 the width and 1/2 the height), we simply decrease the amount a string darkens a pixel from 1/4 to 1/16.
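In code, turning a matrix of string counts into pixel darkness under this assumption could look like the sketch below. The function and parameter names are made up for the example; the 1/4 and 1/16 values come from the paragraph above.

```python
def darkness_from_counts(counts, per_string=0.25):
    """Each overlapping string adds `per_string` darkness, capped at 1.0 (fully dark)."""
    return [[min(1.0, c * per_string) for c in row] for row in counts]

full_scale = darkness_from_counts([[0, 1, 4, 7]])                    # 1/4 per string
half_scale = darkness_from_counts([[4, 16, 20]], per_string=1 / 16)  # 1/16 per string
```

At full scale the cap is reached at 4 strings, and at half width and half height it is reached at 16.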

Future Work

- Try using a graphics library (something that uses GPU acceleration) to draw lines and do image operations